Astrophotography Diaries of a Rookie

Astrophotography combines some of my major passions in life: mathematics, astronomy, computers ... and buying gadgets!

Tuesday, June 20, 2023

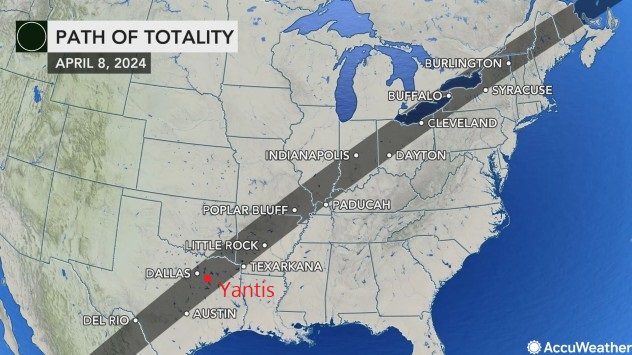

Preparing for next year's Solar Eclipse

Saturday, May 29, 2021

Lunar Eclipse - What worked ... and what didn't.

- Setup of the scope (SkyGuider, polar alignment...) worked perfectly

- Lunar Eclipse Maestro worked well, but somewhere around maximum eclipse it stopped taking images. Luckily I caught this and restarted it.

- I wish Lunar Eclipse Maestro had taken two exposures each time, one for the bright and one for the dark part of the moon. HDR composites might have looked really great!

- For both this and the timelapse, powering the Nikons directly from a 12V battery was perfect (though the battery was pretty much flat by the end of the night, since it powered both the cameras and the laptop).

- The timelapse didn't show the eclipse at all - the moon was just a really, really bright spot, and then a less bright one, with no visible details.

- It gave me that awesome Milky Way image - I love that.

- Somehow my field of view calculations were all wrong. The moon only crossed half of the frame, so I could have shot at 24mm focal length - maybe that would have made a difference.

- Setting the eVscope up was as always a breeze.

- One of the tablets didn't have the latest eVscope software and couldn't connect (we had really poor reception up there).

- The images had a strong pink tint.

It was easy enough to process that out by adjusting the white balance. But for live observing we had to live with a pink moon.


- As always, these are some of my favorites. Just walking around, trying different angles, exposures, focal length...

- Our spot was almost too perfect! I didn't have any objects (trees or such) that I could put in the foreground.
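For the next eclipse, the framing can be checked before leaving home. The horizontal field of view of a rectilinear lens is 2·atan(w / 2f), and the Moon drifts across the sky at roughly 15° per hour times cos(declination). The sketch below compares the two. It assumes a full-frame sensor (36 mm wide) and a Moon path that runs along the long side of the frame (in reality it follows a curved arc). The 14 mm lens, 4-hour window and -21° declination in the example are hypothetical values chosen for illustration.

```python
import math

FULL_FRAME_WIDTH_MM = 36.0  # assumption: full-frame sensor, long side


def field_of_view_deg(sensor_mm: float, focal_mm: float) -> float:
    """Angular field of view along one sensor dimension of a rectilinear lens."""
    return math.degrees(2 * math.atan(sensor_mm / (2 * focal_mm)))


def moon_path_deg(hours: float, declination_deg: float) -> float:
    """Rough angular distance the Moon drifts across the sky in `hours`.

    Uses the sidereal rate (~15.04 deg/hour) scaled by cos(declination) and
    ignores the Moon's own eastward motion (~0.5 deg/hour), so it slightly
    overestimates the path. Good enough for framing, not for precise planning.
    """
    return 15.04 * hours * math.cos(math.radians(declination_deg))


def longest_focal_mm(sensor_mm: float, path_deg: float, margin: float = 1.1) -> float:
    """Longest focal length whose field of view still fits the path plus a margin."""
    needed_deg = path_deg * margin
    if needed_deg >= 180.0:
        raise ValueError("path too long for a rectilinear lens")
    return sensor_mm / (2 * math.tan(math.radians(needed_deg) / 2))


if __name__ == "__main__":
    # Hypothetical example: 4-hour window, Moon at -21 deg declination.
    path = moon_path_deg(4.0, -21.0)
    for focal in (14.0, 24.0):
        fov = field_of_view_deg(FULL_FRAME_WIDTH_MM, focal)
        print(f"{focal:.0f} mm: FOV {fov:.1f} deg, Moon path fills {path / fov:.0%} of it")
    print(f"longest usable focal length: {longest_focal_mm(FULL_FRAME_WIDTH_MM, path):.0f} mm")
```

With these made-up numbers, the Moon's path fills only about half of a 14 mm frame and about three quarters of a 24 mm frame, and the longest focal length that still fits it with a 10% margin comes out around 30 mm.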

Wednesday, May 26, 2021

Lunar Eclipse

On the evening of May 25, after all those preparations, Renate and I got ready. All the cameras and equipment were in the car, along with hot chocolate, Twix bars, chips, water, beef jerky ... and warm clothes. We drove up to Lick Observatory (which is still closed due to Covid-19). But finding a place wasn't easy. Most of the places I had been to before had some obstruction (telescopes, trees) to the southwest. We drove a little way down and found a perfect spot: someone's driveway - with a big "No Parking" sign! But it was almost midnight and we hoped that whoever lived there wouldn't want to leave before 6am...

We set everything up, configured and started the Eclipse Maestro and qDslrDashboard timelapse ... and then enjoyed the event!

And took some great images:

The moon half eclipsed - crazy brightness difference!

More images with the Moon and Silicon Valley. These are why I went up Mt. Hamilton!!!

Tuesday, May 25, 2021

Lunar Eclipse Imaging - Prep

Finally, an eclipse again - this time a lunar eclipse. I planned early and ended up preparing 4 different ways to image it:

- Closeup - using a tracker and Lunar Eclipse Maestro

- Ultra wide angle timelapse

- With the Unistellar eVscope

- Manual widescapes

It turned out that I had a lot to prepare:

1. Closeup

The first challenge was that Lunar Eclipse Maestro only runs on macOS, and only up to Mojave. But Xavier Jubier (the author) told me that I could run it in a VM.

Enter VirtualBox from Oracle. Luckily, I found great instructions on how to install macOS Mojave on VirtualBox. Beyond that, I had to:

- Pass the required USB ports through to the VM. This is fairly easy: connect the USB devices, then select them to be available in the VM.

Note: DON'T select the external hard drive that runs the VM or the keyboard's USB ports here!!!

- The time on macOS was constantly off. I had to install the Guest Additions to fix that. Also, the calendar was set to "Persian" by default - I had to change it to "Gregorian".
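Selecting the devices in the VirtualBox GUI works fine; the same pass-through can also be scripted with VirtualBox's `VBoxManage usbfilter add` command, which makes it repeatable for the next eclipse. The sketch below only builds the command line (it doesn't run it). The VM name "Mojave" is hypothetical; 04b0 is Nikon's USB vendor ID, and the real IDs of every connected device can be listed with `VBoxManage list usbhost`. The same warning applies: only add filters for the cameras, never for the drive holding the VM or the keyboard.

```python
import shlex
from typing import List, Optional


def usb_filter_command(vm_name: str, index: int, name: str,
                       vendor_id: str, product_id: Optional[str] = None) -> List[str]:
    """Build a `VBoxManage usbfilter add` command that hands a USB device to a VM.

    vendor_id / product_id are 4-digit hex strings as shown by
    `VBoxManage list usbhost`. Leaving product_id out matches every
    device from that vendor.
    """
    if len(vendor_id) != 4 or any(c not in "0123456789abcdefABCDEF" for c in vendor_id):
        raise ValueError(f"vendor id must be 4 hex digits, got {vendor_id!r}")
    cmd = ["VBoxManage", "usbfilter", "add", str(index),
           "--target", vm_name, "--name", name, "--vendorid", vendor_id]
    if product_id is not None:
        cmd += ["--productid", product_id]
    return cmd


if __name__ == "__main__":
    # Hypothetical VM name; 04b0 = Nikon. Cameras only, not the VM's drive or keyboard.
    cmd = usb_filter_command("Mojave", 0, "Nikon camera", "04b0")
    print(shlex.join(cmd))  # paste into a terminal, or pass cmd to subprocess.run
```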

Being able to watch the eclipse up close on a tablet should be convenient, especially since it will be cold and we can watch from inside the warm car!

Finally, I will use my 135mm and the 85-300mm lens for manually composing and shooting. Just using a tripod and remote shutter.

Sunday, March 7, 2021

ΔT and UTC data for 10Micron Mount

I used to download the ΔT and UTC data from the US Naval Observatory (USNO) server (maia.usno.navy.mil/ser7). But that server was shut down some time ago.

It took me a while to find an alternative, but the data can be downloaded from this NASA server: https://cddis.nasa.gov/archive/products/iers/. It requires registration (for free!), but always has the latest data.
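If ΔT itself is ever needed rather than the raw files, it follows from a standard identity, assuming the downloaded data provide UT1-UTC and the leap-second count TAI-UTC: ΔT = TT - UT1 = 32.184 s + (TAI - UTC) - (UT1 - UTC). A minimal sketch; parsing the IERS files is left out because their layouts differ, and the UT1-UTC value in the example is made up.

```python
# TT - TAI is fixed by definition; TAI - UTC is the accumulated leap seconds.
TT_MINUS_TAI = 32.184  # seconds, exact by definition
TAI_MINUS_UTC = 37.0   # seconds, valid from 2017-01-01 until the next leap second


def delta_t(ut1_minus_utc: float, tai_minus_utc: float = TAI_MINUS_UTC) -> float:
    """ΔT = TT - UT1 in seconds, from UT1-UTC and the leap-second count."""
    return TT_MINUS_TAI + tai_minus_utc - ut1_minus_utc


if __name__ == "__main__":
    # Hypothetical UT1-UTC; read the real value from the downloaded IERS data.
    print(f"ΔT = {delta_t(-0.2):.3f} s")
```

With UT1-UTC = -0.2 s this gives ΔT = 69.384 s.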

Sunday, September 27, 2020

First Light: M51

Another interesting aspect is the high number of supernovae in M51. There were supernovae in 1994, 2005 and 2011 - three supernovae in 17 years is much more than what we see in other galaxies. It's not clear what causes this, or whether the encounter with NGC 5195 has something to do with it.

- Normal calibration of the individual frames

We realized that the shutter on the ML50100 camera doesn't close completely and lets some light in. To take bias and dark frames, we had to cover the scope (I used a Telegizmo cover for a Dobsonian that we could pull over the entire scope, finder scope and mount).

- Use the DefectMap process to correct dark (cold) and bright (hot) columns.

- Equalize all images.

- Use the SubframeSelector process to create weights for all images and mark the best ones.

And just so that I don't forget the parameters:

Scale: 0.48 arcsec/pixel (1x1 binning), 0.96 arcsec/pixel (2x2 binning)

Gain: 0.632 e-/ADU

Weighting: (15*(1-(FWHM-FWHMMin)/(FWHMMax-FWHMMin)) + 15*(1-(Eccentricity-EccentricityMin)/(EccentricityMax-EccentricityMin)) + 20*(SNRWeight-SNRWeightMin)/(SNRWeightMax-SNRWeightMin))+50

- Register (align) the images (I registered all images against the best Ha image)

- Use the LocalNormalization process to improve normalization of all frames

- Use ImageIntegration to stack the images

- Use DrizzleIntegration to improve the stacked images

- Use LinearFit on all images

- Use DynamicBackgroundExtraction to remove any remaining gradients

- Use ChannelCombination on the Red, Green and Blue images to create a color image

- Use StarNet to remove the stars from the Ha image

- Use the NRGBCombination script to add the Ha data to the color image

- Use PhotometricColorCalibration to create the right color balance

- Use BackgroundNeutralization to create an even background

- Use SCNR to remove any green residues

- Stretch and process the image (no more noise reduction - the advantage of dark skies!!!)

- Enhance the feature contrast (the PixInsight tutorials from LightVortexAstronomy are awesome!!!)

- I then use the Convolution process on the RGB data to remove any processing noise in the colors.

- Process the Luminance image the same way

- Sharpen the image ever so slightly

- Use LRGBCombination to apply the luminance image to the RGB image

- Do some final processing (usually just CurvesTransformation to drop the background a little and maybe adjust Lightness and Saturation to bring out the object better).

- Done!!!
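To see what the SubframeSelector weighting expression above actually does, here it is re-implemented outside PixInsight as a small sketch. Each term maps a measurement onto 0..1 across all frames, so a frame that is best in every respect scores 100 and one that is worst in every respect scores 50. The `FrameStats` fields simply stand in for the FWHM, Eccentricity and SNRWeight values in the expression; they are not a PixInsight API. The zero-range guard (all frames sharing one value) is an addition of this sketch. Because FWHM only enters as a ratio, the 0.48 vs 0.96 arcsec/pixel scale setting doesn't change that term as long as all frames use the same scale.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class FrameStats:
    """Per-frame measurements, named after the variables in the expression."""
    name: str
    fwhm: float
    eccentricity: float
    snr_weight: float


def _normalized(value: float, lo: float, hi: float) -> float:
    # Map a value onto 0..1 across all frames. If every frame has the same
    # value the range is zero; this sketch then returns 0 instead of dividing by 0.
    return 0.0 if hi == lo else (value - lo) / (hi - lo)


def subframe_weights(frames: List[FrameStats]) -> Dict[str, float]:
    """15*(1-nFWHM) + 15*(1-nEccentricity) + 20*nSNRWeight + 50, i.e. 50..100."""
    fwhm_lo, fwhm_hi = min(f.fwhm for f in frames), max(f.fwhm for f in frames)
    ecc_lo, ecc_hi = min(f.eccentricity for f in frames), max(f.eccentricity for f in frames)
    snr_lo, snr_hi = min(f.snr_weight for f in frames), max(f.snr_weight for f in frames)
    return {
        f.name: 15 * (1 - _normalized(f.fwhm, fwhm_lo, fwhm_hi))
        + 15 * (1 - _normalized(f.eccentricity, ecc_lo, ecc_hi))
        + 20 * _normalized(f.snr_weight, snr_lo, snr_hi)
        + 50
        for f in frames
    }


if __name__ == "__main__":
    # Made-up measurements for three frames.
    frames = [
        FrameStats("Ha_001", fwhm=2.0, eccentricity=0.40, snr_weight=10.0),
        FrameStats("Ha_002", fwhm=3.0, eccentricity=0.60, snr_weight=5.0),
        FrameStats("Ha_003", fwhm=2.5, eccentricity=0.50, snr_weight=7.5),
    ]
    for name, weight in subframe_weights(frames).items():
        print(f"{name}: {weight:.1f}")
```

With these made-up numbers the sharpest, roundest, least noisy frame gets 100, the worst gets 50, and the one exactly in between gets 75.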

Removing stars with StarNet++

- Creating a star mask and subtracting it from the image, which leaves black holes in the image. That's not too bad, as these black holes are where the stars were. But if the stars in the star mask are not the same size as the real stars, some artifacts are left.

- I tried Straton - but could never get a clean image out of it.
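The size-mismatch problem with the star-mask approach is easy to see in one dimension. This toy sketch takes a single image row through a Gaussian star (brightness, width and background are made-up values) and subtracts a binary mask of a chosen radius. Pixels inside the mask drop to 0, the "black hole", while whatever part of the star extends beyond the mask stays behind as a bright ring. It only illustrates the artifact; real star masks are built differently.

```python
import math
from typing import List

BACKGROUND = 100.0  # hypothetical sky level in ADU


def star_row(width: int, center: int, peak: float = 1000.0, sigma: float = 2.0) -> List[float]:
    """One image row through a Gaussian star sitting on a flat background."""
    return [BACKGROUND + peak * math.exp(-((x - center) ** 2) / (2 * sigma ** 2))
            for x in range(width)]


def subtract_star_mask(row: List[float], center: int, mask_radius: int) -> List[float]:
    """Subtract a binary star mask (1 within mask_radius, else 0) scaled to the row maximum.

    Masked pixels are clipped to 0 (the "black hole"); unmasked pixels are unchanged.
    """
    scale = max(row)
    mask = [1.0 if abs(x - center) <= mask_radius else 0.0 for x in range(len(row))]
    return [max(0.0, v - m * scale) for v, m in zip(row, mask)]


def leftover_halo(row: List[float], center: int, mask_radius: int) -> float:
    """Brightest excess over the background left outside the mask (the visible ring)."""
    cleaned = subtract_star_mask(row, center, mask_radius)
    return max(v - BACKGROUND for x, v in enumerate(cleaned) if abs(x - center) > mask_radius)


if __name__ == "__main__":
    row = star_row(width=41, center=20)
    for radius in (2, 4, 8):
        print(f"mask radius {radius} px: halo left {leftover_halo(row, 20, radius):.1f} ADU")
```

With sigma = 2 px, a 2 px mask leaves a ring about a third as bright as the star's peak just outside the hole, while an 8 px mask leaves essentially nothing.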